| LTM3D: Bridging Token Spaces for Conditional 3D

Generation with Auto-Regressive Diffusion Framework

|

|

Xin Kang

1,2,

Zihan Zheng

1,2,

Lei Chu

2,

Yue Gao

2,

Jiahao Li

2,

Hao Pan

3,

Xuejin Chen

1,

Yan Lu

2

|

|

1University of Science and Technology of China,

2Microsoft Research Asia,

3Tsinghua University,

|

| |

|

|

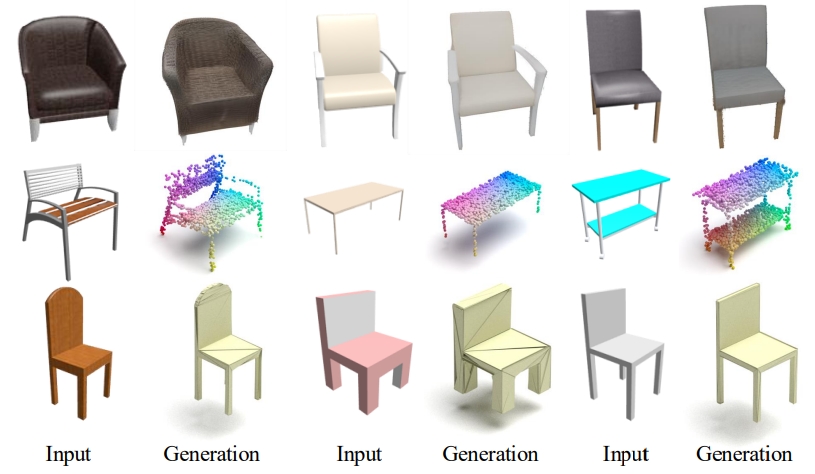

LTM3D is a conditional 3D shape generation framework that supports image and text inputs, as

well as multiple 3D data

representations, including signed distance fields, 3D Gaussian Splatting, point clouds, and

meshes.

|

| Abstract |

|

We present LTM3D, a Latent Token space Modeling framework for conditional 3D shape generation

that integrates the strengths of diffusion and auto-regressive (AR) models. While

diffusion-based methods effectively model continuous latent spaces and AR models excel at

capturing inter-token dependencies, combining these paradigms for 3D shape generation remains a

challenge. To address this, LTM3D features a Conditional Distribution Modeling backbone,

leveraging a masked autoencoder and a diffusion model to enhance token dependency learning.

Additionally, we introduce Prefix Learning, which aligns condition tokens with shape latent

tokens during generation, improving flexibility across modalities. We further propose a Latent

Token Reconstruction module with Reconstruction-Guided Sampling to reduce uncertainty and

enhance structural fidelity in generated shapes. Our approach operates in token space, enabling

support for multiple 3D representations, including signed distance fields, point clouds, meshes,

and 3D Gaussian Splatting. Extensive experiments on image- and text-conditioned shape generation

tasks demonstrate that LTM3D outperforms existing methods in prompt fidelity and structural

accuracy while offering a generalizable framework for multi-modal, multi-representation 3D

generation.

|

|

|

Paper [PDF]

Code and data [Coming Soon]

Citation

@misc{kang2025ltm3dbridgingtokenspaces,

title={LTM3D: Bridging Token Spaces for Conditional 3D Generation with Auto-Regressive

Diffusion Framework},

author={Xin Kang and Zihan Zheng and Lei Chu and Yue Gao and Jiahao Li and Hao Pan and

Xuejin Chen and Yan Lu},

year={2025},

eprint={2505.24245},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.24245},

}

|

|

| |

| Algorithm pipeline |

|

|

Our framework LTM3D consists of three components. In Conditional Distribution Modeling, we

implement an auto-regressive diffusion backbone that learn inter-token dependencies with a

Masked Auto-Encoder and learn conditional token distributions with an MLP-based DenoiseNet.

In Prefix Learning, we use a cross-attention layer and a feed-forward layer to project condition

tokens into prefix tokens. In Latent Token Reconstruction, we use a cross-attention layer and

$D$ self-attention layers to reconstruct shape tokens from input condition tokens. Note that

Latent Token Reconstruction module is trained separately with a reconstruction loss.

|

| |

| Results |

|

|

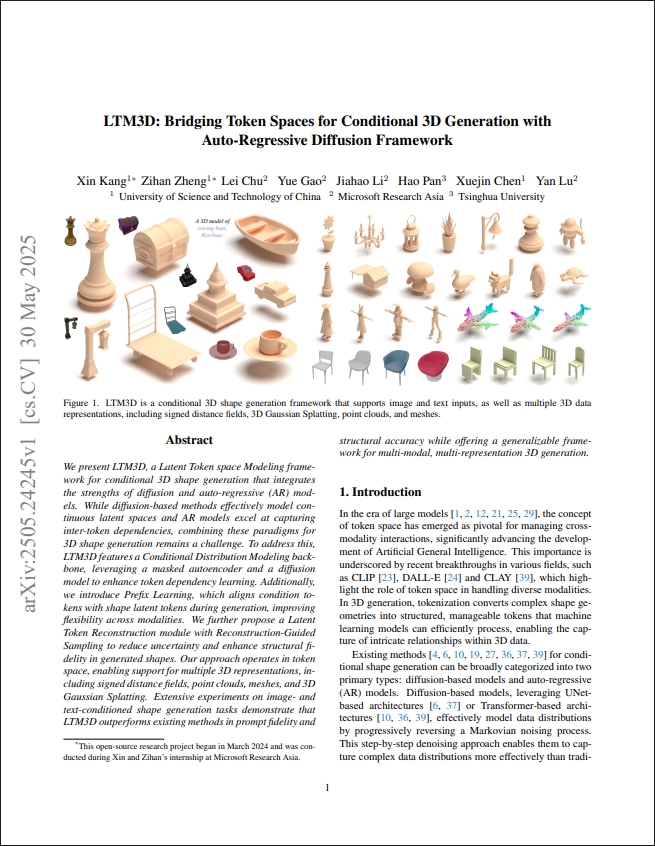

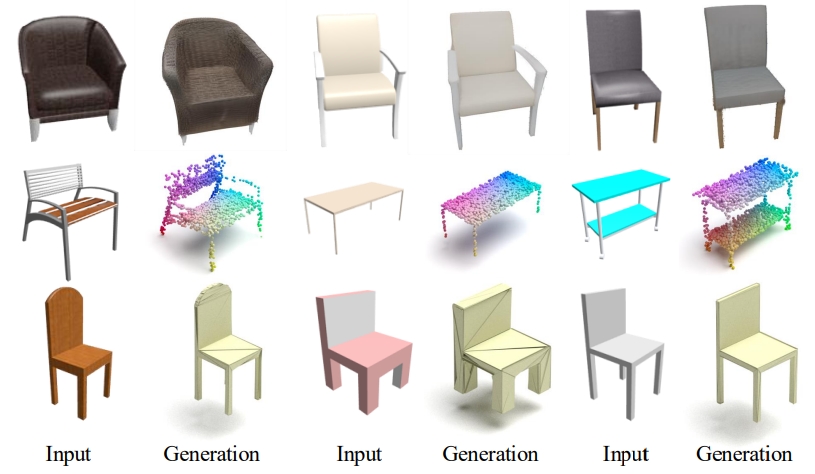

Image-conditioned generation results for SDF data on Objaverse. Each pair consists of an input

image (left) and the corresponding generation result (right).

|

| |

|

|

Image-conditioned generation results for SDF data on ShapeNet. Each pair consists of an input

image (left) and the corresponding generation result (right).

|

| |

|

|

Qualitative image-conditioned generation results on ShapeNet using various 3D representations

including 3D Gaussian Splatting, point cloud and mesh.

|

| |

| Ethics statement |

|

This work is conducted as part of a research project. While we plan to share the code and

findings to promote transparency and reproducibility in research, we currently have no plans to

incorporate this work into a commercial product. In all aspects of this research, we are

committed to adhering to Microsoft AI principles, including fairness, transparency, and

accountability.

|

| |

| |

| |

| ©Lei Chu. Last update: 3 Jun, 2025. |